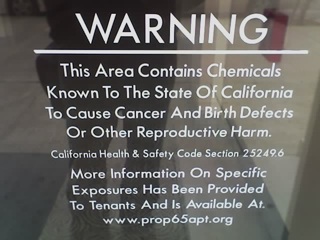

We've rented an apartment in San Francisco so that visiting Sydney employees have somewhere to stay. According to this warning on the front door, there's something inside, somewhere, that will give us cancer and cause our children to be born with three heads.

The sign directs us to a helpful website that promises to explain why the building comes with such a dire warning.

Except it doesn't. The site in question just rattles off a laundry list of possible culprits, ranging from the everyday ("Any time organic matter such as gas, charcoal or wood is burned, Proposition 65-listed chemicals are released into the air") to the concerning ("Construction materials... contain chemicals, such as formaldehyde resin, asbestos, arsenic, cadmium and creosote.")

So yeah. Either I'm breathing asbestos and guzzling arsenic every day, or some of the apartments have gas stoves. Thank you very much, Proposition 65.

The vagueness of the sign, plus the fact that you can find the same words on half the buildings in the city, makes it entirely useless for any purpose beyond:

- Covering the State's ass in case of litigation

- Cultivating a vague feeling of helplessness and dread

An effective warning sign must:

- Effectively communicate any immediate danger

- Give the reader enough information to evaluate the risk, and determine how to mitigate that risk

The first is important because with some dangers, by the time you've taken the time to evaluate the risk, it's too late. If someone's about to walk over a cliff, you might only have time to yell "STOP!"

The second is important because shouting "STOP!" only lasts a moment. Once you've got someone to stop, you have to tell them why. Otherwise they're either just going to shrug and step forward again (and probably not listen to you the next time you scream a warning at them), or they're going to decide that the risk of cancer is too great and never step inside a building in California.

With that in mind, let's look at two recent well-publicised computer security issues, and ask the question: "Were these effective warning signs?"

1. The MacBook WiFi Hack

In an article entitled Hijacking a Macbook in 60 Seconds, Washington Post reporter Brian Krebs (whose work has featured in this blog before) presents a video of hacker David Maynor demonstrating a remote root access exploit on a MacBook through a WiFi device driver. Krebs explains that this is in fact a general, cross-platform vulnerability in device drivers that affects multiple WiFi vendors across Windows, Mac OS X and Linux.

Did this warning effectively communicate the immediate danger? Well, no. Instead of choosing a headline and angle that accurately reflects the message they're trying to communicate -- that there is a real danger to using WiFi on any platform -- the article instead screams "MacBook Hacked!"

This immediately muddies the water, because instead of communicating an important warning, you've instead prompted a large segment of Windows users to say "Yeah, take that you arrogant Mac bastards", and an equally large segment of Mac users to say "Hey! This is bullshit!" Any real point is lost in the ensuing partisan bloodshed.

Did the warning communicate enough information for third parties to evaluate the risk they were facing? No again. There was such a huge disparity between the exploit that was claimed -- a general vulnerability across platforms and vendors -- and the exploit that was demonstrated -- a single hack against an incredibly unlikely combination of hardware -- that readers were left puzzled as to what was actually being demonstrated at all.

Why demonstrate the hack against a third-party WiFi card, when nobody would ever use such a card with the Airport-enabled MacBook? If the exploit were truly cross-platform, why not take the additional sixty seconds to demonstrate the inverse, and show the MacBook hacking into the Dell? A couple of spectacularly unconvincing explanations were offered, and now even SecureWorks, Maynor's employer, prefaces the video with a disclaimer, warning that the exploit being demonstrated differs significantly from the exploit being claimed:

> This video presentation at Black Hat demonstrates vulnerabilities found in wireless device drivers. Although an Apple MacBook was used as the demo platform, it was exploited through a third-party wireless device driver - not the original wireless device driver that ships with the MacBook. As part of a responsible disclosure policy, we are not disclosing the name of the third-party wireless device driver until a patch is available.

The practical upshot of this particular security warning? Two weeks later I was at WWDC, Apple's developer conference. Present were thousands of Macintosh laptops, including my own, all logging on to the conference's open WiFi with carefree abandon.

2. The Ruby on Rails Vulnerability

Last week, word got around the blogosphere: Ruby on Rails 1.1.0 through 1.1.4 were vulnerable to a potentially nasty security exploit, and everyone was advised to upgrade to version 1.1.5 immediately. More information would be revealed as soon as everyone had a chance to upgrade.

This warning at least satisfied the first criterion. It effectively communicated the immediate danger, and gave you something you could do Right Now to prevent it. Unfortunately:

- It was the wrong fix: the next day, everyone who had rushed to upgrade to 1.1.5 was told to upgrade again to 1.1.6.

- Because of the lack of disclosure, the simpler workaround (adding an Apache rewrite rule) was not made public at the time of the first announcement, so anyone who was not willing (or able) to upgrade their production Rails installations without any testing or burn-in time was left out in the cold.

- When the vulnerability was finally disclosed, it was introduced with the phrase "The cat is out of the bag", suggesting that someone outside the core Rails camp had already done the obvious, and worked out what the flaw was by examining what changed between 1.1.4 and 1.1.5, something that any potential bad guy could have done just as easily.

Often, full disclosure is explained as a way to make sure vendors are responsive, using "naming and shaming" to force a faster patch schedule. This is certainly one aspect of the practice, but far more important is the fact that it gives those people who might be running the vulnerable software enough information to make informed decisions about their security.